🚀 Running GPTStonks Chat Community Edition (CE) on Your Personal Computer

Hey stock enthusiasts! 📈 Ready to unleash the power of GPTStonks Chat Community Edition (CE) on your humble PC? We've got you covered! In this guide, we'll walk you through the steps. Let's dive in! 🚀

What Can GPTStonks Chat CE Do for You?

GPTStonks Chat CE is your financial wizard, offering a magical blend of functionalities:

- 🌐 Analyze the web: Fetch and analyze the latest info about companies, states, investors, and more.

- 🕵️♂️ Trace the info: Understand how the data is retrieved, making debugging a breeze.

- 📊 Get historical data: Visualize financial data via OpenBB (OpenBB account needed).

- 📈 Create awesome plots: Auto-plot historical price data using cool candle charts.

- 🧐 Perform complex analysis: Ask complex queries like stock overviews, sentiment analysis, or latest news.

- 🤖 Customize the agent: Integrate any LLM supported by LangChain and LlamaIndex for supercharged performance.

- 🧰 Expand your tools: Seamlessly integrate custom or pre-existing tools.

The future of finance with AI is closer than ever!

Meet the GPTStonks Chat CE Trio

GPTStonks Chat CE flaunts a microservice trio:

- 💻 Frontend: Crafted in React, featuring the chatbot and charts powered by TradingView's Lightweight Charts. Get it on GitHub Packages and DockerHub.

- 🚀 Backend (API): Python-powered with FastAPI, running a LangChain agent that decides which tools to use. Grab it on GitHub Packages and DockerHub.

- 🗃️ Database: MongoDB, readily available on DockerHub, stores the provider tokens (personal access tokens, PATs); at present, only OpenBB's PAT is supported.

Running GPTStonks Chat CE with Docker Compose

Configuration

Preferred way: cloning with git

If you already have git installed, you can obtain the configuration files by cloning GPTStonks' API repository.

Alternative way: creating the configuration files

How to install Docker Compose

To install Docker Compose, follow the official installation guide.

Getting this trio up and running is a breeze with Docker Compose. Just head over to your local directory, create a new file called docker-compose.yaml, and include the following content:

version: "3.8"

services:
  api:
    image: gptstonks/api:latest
    ports:
      - "8000:8000"
    env_file:
      - .env.template # env variables to customize GPTStonks Chat CE (required)
    volumes:
      - ${LOCAL_LLM_PATH}:/api/gptstonks_api/zephyr-7b-beta.Q4_K_M.gguf # LLM (required)

  frontend:
    image: gptstonks/front-end:latest
    ports:
      - "3000:80"

  mongo:
    image: mongo
    ports:
      - "27017:27017"
    environment:
      - MONGO_INITDB_DATABASE=mongodb
    volumes:
      - mongo-data:/data/db
      - ./mongo-init.js:/docker-entrypoint-initdb.d/mongo-init.js:ro # MongoDB initialization script (required)

volumes:
  mongo-data:
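Before running docker compose up for the first time, a quick pre-flight check can catch the most common setup mistakes. The Python sketch below is our own helper, not part of GPTStonks Chat CE, and its names (REQUIRED_FILES, HOST_PORTS, preflight) are hypothetical. It assumes you run it from the directory that holds docker-compose.yaml. It checks that the Compose file and the two files it marks as required exist there, and it flags any published host port (8000, 3000 or 27017) that another program is already listening on. The port test is only a heuristic: it tries a TCP connection to 127.0.0.1 and does not detect every kind of conflict.

```python
import socket
from pathlib import Path

# Files the docker-compose.yaml above depends on (the last two are marked "required").
REQUIRED_FILES = ("docker-compose.yaml", ".env.template", "mongo-init.js")

# Host ports published by each service in docker-compose.yaml.
HOST_PORTS = {"api": 8000, "frontend": 3000, "mongo": 27017}


def missing_files(directory="."):
    """Return the required configuration files that are absent from `directory`."""
    base = Path(directory)
    return [name for name in REQUIRED_FILES if not (base / name).is_file()]


def port_in_use(port, host="127.0.0.1"):
    """Heuristic: True if something already accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        return sock.connect_ex((host, port)) == 0


def preflight(directory="."):
    """Collect problems that would likely make `docker compose up` fail."""
    problems = [f"missing file: {name}" for name in missing_files(directory)]
    for service, port in HOST_PORTS.items():
        if port_in_use(port):
            problems.append(f"port {port} (published by {service}) is already in use")
    return problems
```

If you run print(preflight()) in that directory before starting the stack, it should print an empty list when nothing is missing or occupied. Once the stack is up, the port checks will of course report the containers' own ports.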

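A short script is also needed to initialize the database with the expected structure. Create a file named mongo-init.js with the following content. Note that the official mongo image only runs scripts placed in /docker-entrypoint-initdb.d when its data directory is empty, that is, on the first start.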

db = db.getSiblingDB('mongodb');
db.tokens.insertOne({
  description: "Initial document"
});
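To confirm that this script has run, you can look for its seed document from the host. The sketch below assumes the pymongo driver is installed (pip install pymongo). The function name tokens_initialized is hypothetical. The function relies only on indexing a client by database and collection name and on count_documents, which is how pymongo's MongoClient behaves.

```python
def tokens_initialized(client, db_name="mongodb"):
    """Return True if the seed document from mongo-init.js exists in `tokens`.

    `client` is expected to behave like pymongo.MongoClient, where
    client[db_name]["tokens"] is a collection offering count_documents(filter).
    """
    tokens = client[db_name]["tokens"]
    # mongo-init.js inserts exactly this document on the first start.
    return tokens.count_documents({"description": "Initial document"}) > 0
```

For example, after from pymongo import MongoClient, call tokens_initialized(MongoClient("mongodb://localhost:27017")). From the host, you connect through the published port 27017 on localhost. Inside the Compose network, the API reaches the same server at mongodb://mongo:27017, as set in MONGO_URI.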

Finally, you'll need to set up some environment variables to customize GPTStonks Chat CE. To do this with the Docker Compose provided earlier, simply store these variables in a file named .env.template. Here's an example for reference:

# Debug mode for the API (comment out to disable)
DEBUG_API=1

# ID of the embedding model to use
AUTOLLAMAINDEX_EMBEDDING_MODEL_ID="local:BAAI/bge-base-en-v1.5"

# LLM to use. Format provider:model_id, where model_id depends on the provider.
# Example with a local LLM run through llama.cpp.
# Model downloaded from https://huggingface.co/TheBloke/zephyr-7B-beta-GGUF.
LLM_MODEL_ID="llamacpp:./gptstonks_api/zephyr-7b-beta.Q4_K_M.gguf"

# Context window when using llama.cpp models
LLM_LLAMACPP_CONTEXT_WINDOW=8000
AUTOLLAMAINDEX_LLM_CONTEXT_WINDOW=8000

# Sampling temperature: randomness when sampling from the LLM's output distribution
# 0 - greedy sampling, 1 - distribution without modification
LLM_TEMPERATURE=0

# Max tokens to sample from the LLM
LLM_MAX_TOKENS=512

# Description of the OpenBB chat tool
OPENBBCHAT_TOOL_DESCRIPTION="useful to get financial and investing data. Input should be the concrete data to retrieve, in natural language."

# Path to the Vector Store Index (VSI).
# The VSI is already present in the Docker image of the API.
AUTOLLAMAINDEX_VSI_PATH="vsi:./gptstonks_api/data/openbb_v4.1.0_historical_vectorstoreindex_bgebaseen"

# Agent prompt prefix; includes the model's chat format
CUSTOM_GPTSTONKS_PREFIX="<|system|>\n</s>\n<|user|>\nYou are a helpful and useful financial assistant.\n\nTOOLS:\n------\n\nYou have access to the following tools:"

# Agent prompt suffix; memory is removed by default
CUSTOM_GPTSTONKS_SUFFIX="Begin! New input: {input} {agent_scratchpad}</s>\n<|assistant|>"

# Default QA template, including the model's chat format
CUSTOM_GPTSTONKS_QA="<|system|>\n</s>\n<|user|>\nContext information is below.\n---------------------\n{context_str}\n---------------------\nGiven the context information and not prior knowledge, answer the query.\nQuery: {query_str}\nAnswer:</s>\n<|assistant|>"

# QA (Question-Answer) template used by AutoLlamaIndex; asks the LLM to write Python code
AUTOLLAMAINDEX_QA_TEMPLATE="<|system|>\n</s>\n<|user|>\nYou must write Python code to solve the query '{query_str}'. You must use only one of the functions below and store its output in a variable called `res`.\n---------------------\n{context_str}\n---------------------\nWrite the Python code between '```python' and '```', using only one of the functions above. Do not use `print`.</s>\n<|assistant|>"

# Disable hybrid retriever, only vector search
AUTOLLAMAINDEX_NOT_USE_HYBRID_RETRIEVER=1

# MongoDB URI
MONGO_URI=mongodb://mongo:27017

# MongoDB database name
MONGO_DBNAME=mongodb
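A typo or a missing line in this file usually shows up only when the API fails at startup. As a hedged sketch, the Python helper below parses KEY=VALUE lines the way they are written above: comments and blank lines are skipped, and surrounding double quotes are removed. It then reports which of a few keys are missing or empty. The REQUIRED_KEYS tuple is our assumption based on this example, not an official list from GPTStonks. The parser also does not emulate every quoting or escaping rule that Docker Compose applies to env files.

```python
from pathlib import Path

# Assumed set of essential keys, taken from the example above (not an official list).
REQUIRED_KEYS = (
    "LLM_MODEL_ID",
    "AUTOLLAMAINDEX_EMBEDDING_MODEL_ID",
    "AUTOLLAMAINDEX_VSI_PATH",
    "MONGO_URI",
    "MONGO_DBNAME",
)


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments.

    Surrounding double quotes are stripped; backslash escapes are kept literally.
    """
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split at the first "=" only
        if not sep:
            continue  # not a KEY=VALUE line
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] == '"':
            value = value[1:-1]
        values[key.strip()] = value
    return values


def missing_keys(path=".env.template"):
    """Return the required keys that are absent or empty in the env file at `path`."""
    env = parse_env(Path(path).read_text(encoding="utf-8"))
    return [key for key in REQUIRED_KEYS if not env.get(key)]
```

For the file above, missing_keys(".env.template") should return an empty list.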

Large language model selection

In this tutorial, the selected model is Zephyr 7B Beta - GGUF. Download it with huggingface-cli:

huggingface-cli download TheBloke/zephyr-7B-beta-GGUF zephyr-7b-beta.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False
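A wrong download, for instance an HTML error page saved under the .gguf name, tends to surface later as a confusing llama.cpp error. GGUF files start with the four bytes GGUF followed by a little-endian 32-bit format version, so a short header check catches this early. The Python function below is a sketch of such a check. Its name is hypothetical, and a valid header does not prove that the file is complete.

```python
import struct
from pathlib import Path

GGUF_MAGIC = b"GGUF"


def gguf_version(path):
    """Return the GGUF format version from the file header, or None if it is not GGUF.

    The header starts with the 4-byte magic b"GGUF" followed by a
    little-endian uint32 version number.
    """
    with Path(path).open("rb") as fh:
        header = fh.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

For the file downloaded above, gguf_version("zephyr-7b-beta.Q4_K_M.gguf") should return a small positive integer (the GGUF version) rather than None.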

How to install huggingface-cli

huggingface-cli can be installed in your Python environment using pip, as described in HuggingFace's docs.

Once you've downloaded the model, mount it at /api/gptstonks_api/zephyr-7b-beta.Q4_K_M.gguf inside the API container. This is crucial, because the LLM_MODEL_ID variable in .env.template references this path within the container. To do so, set LOCAL_LLM_PATH to the path of the downloaded model file when running docker compose up. The mount target is a single file, so point the variable at the file, not at its directory. For example, if the model sits next to docker-compose.yaml:

LOCAL_LLM_PATH=./zephyr-7b-beta.Q4_K_M.gguf docker compose up
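If you start the stack from a Python script, this step can be wrapped in a small helper. The following is a sketch under our own assumptions, and the name compose_env is hypothetical. It resolves the model path to an absolute file path, refuses to continue if the file does not exist, and returns an environment with LOCAL_LLM_PATH set. Checking first matters because, with the short volume syntax used in docker-compose.yaml, Docker creates a missing host path as an empty directory. It then mounts that directory where the API expects the .gguf file.

```python
import os
from pathlib import Path


def compose_env(model_path, base_env=None):
    """Return an environment for `docker compose up` with LOCAL_LLM_PATH set.

    Raises FileNotFoundError instead of letting Docker create an empty
    directory at a missing host path.
    """
    path = Path(model_path).expanduser().resolve()
    if not path.is_file():
        raise FileNotFoundError(f"model file not found: {path}")
    # Start from the current environment (or a caller-supplied mapping).
    env = dict(os.environ if base_env is None else base_env)
    env["LOCAL_LLM_PATH"] = str(path)
    return env
```

From the directory containing docker-compose.yaml, you could then run subprocess.run(["docker", "compose", "up"], env=compose_env("zephyr-7b-beta.Q4_K_M.gguf"), check=True).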

Using a different model

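Considering a different model? No worries, it's as easy as setting LLM_MODEL_ID in the .env.template file to your preferred model. For a local GGUF file, also make sure the path in LLM_MODEL_ID matches where the file is mounted in the container. After that, run docker compose up again and you're good to go! 🚀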

If you're leaning towards closed LLMs, such as OpenAI's models, the process is straightforward with GPTStonks Chat CE. Just add a valid OPENAI_API_KEY to .env.template and set LLM_MODEL_ID to openai:<model-name> (e.g., openai:gpt-3.5-turbo-1106).
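Because LLM_MODEL_ID always has the form provider:model_id, you can sanity-check it before restarting. The sketch below assumes the value is split at the first colon, so that a llama.cpp path like the one in .env.template stays intact. It enforces only the one rule stated here: the openai provider needs OPENAI_API_KEY. The function names are hypothetical, and the check does not confirm that a provider or model name is actually supported by GPTStonks Chat CE.

```python
def parse_llm_model_id(model_id):
    """Split a provider:model_id string at the first colon."""
    provider, sep, name = model_id.partition(":")
    if not sep or not provider or not name:
        raise ValueError(f"expected 'provider:model_id', got {model_id!r}")
    return provider, name


def check_llm_config(env):
    """Return a list of problems with the LLM settings in `env` (a mapping)."""
    try:
        provider, _ = parse_llm_model_id(env.get("LLM_MODEL_ID", ""))
    except ValueError as exc:
        return [str(exc)]
    # The only provider-specific rule described in this guide.
    if provider == "openai" and not env.get("OPENAI_API_KEY"):
        return ["LLM_MODEL_ID uses the openai provider but OPENAI_API_KEY is empty"]
    return []
```

Any mapping of environment variables works as input, for example dict(os.environ).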

A quick heads-up: different models may perform best with specific prompt structures. Keep that in mind for optimal results! 👍💻

Starting GPTStonks Chat CE

Start the stack with docker compose up. The first run takes a few minutes, since Docker has to download all the necessary images; after that, the environment starts automatically. Once the API reports in the Docker Compose output that it is running, you can access GPTStonks Chat CE by navigating to localhost:3000 in your web browser. The webpage will display content similar to the following:

Success

🌟 Congratulations! You've got GPTStonks Chat CE running on your PC. 🌟
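If you'd rather script the wait than watch the Docker Compose logs, you can poll the frontend until it answers. The sketch below is our own helper, not a GPTStonks tool. It targets http://localhost:3000, the host port published for the frontend. It treats any HTTP response, even an error status, as a sign that a server is up, and it bypasses any proxy configured in the environment because the target is local. The 300-second default timeout and 5-second interval are arbitrary choices.

```python
import http.client
import time
import urllib.error
import urllib.request


def wait_until_up(url="http://localhost:3000", timeout=300.0, interval=5.0):
    """Poll `url` until an HTTP server answers or `timeout` seconds elapse."""
    # Empty ProxyHandler: ignore http_proxy and similar, since the target is local.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(url, timeout=interval):
                return True
        except urllib.error.HTTPError as err:
            err.close()
            return True  # the server replied, just not with a 2xx status
        except (OSError, http.client.HTTPException):
            pass  # not accepting connections yet (URLError is an OSError subclass)
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        time.sleep(min(interval, remaining))
```

The same function works for the API at http://localhost:8000. Keep in mind that a responding web server does not guarantee that the LLM has finished loading.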

Chatting with GPTStonks Chat CE: Let the Fun Begin! 🎉

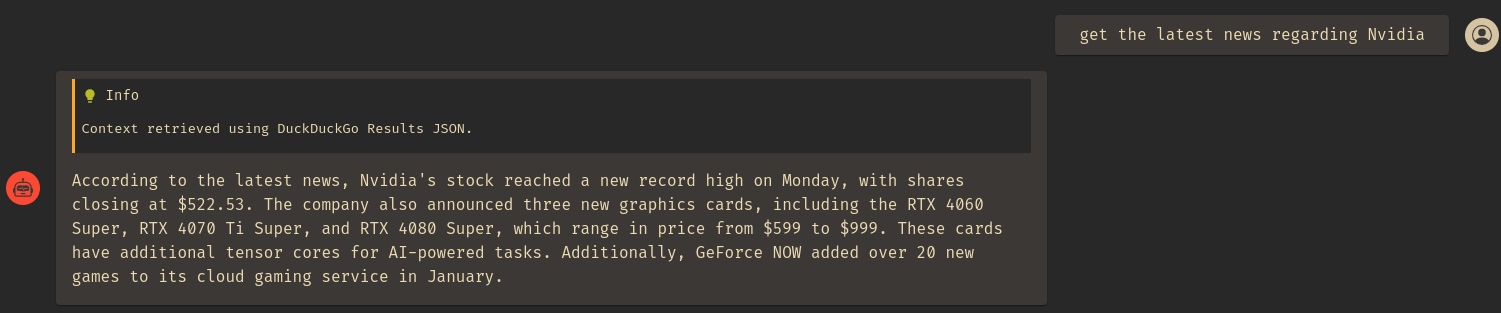

Now for the fun part! Head over to localhost:3000 and witness the magic. Throw any financial query at it, and let the chatbot impress you. Here's a peek when asked about Nvidia's latest news:

Great! The news is summarized, and a header is added so that the source of the data is clear. We can also ask for more specific financial data. Currently, OpenBB is the sole provider for this purpose, but there are plans to incorporate additional providers in the future.

To use OpenBB as an external provider, first create your account following their instructions. Then, set up the API keys of your financial data providers (e.g., Financial Modeling Prep, Polygon, etc.) in the API keys section of the OpenBB Platform. Most of them have free plans! Finally, set up your OpenBB PAT in GPTStonks Chat CE by going to the API Keys section that appears after selecting the red arrow on the left:

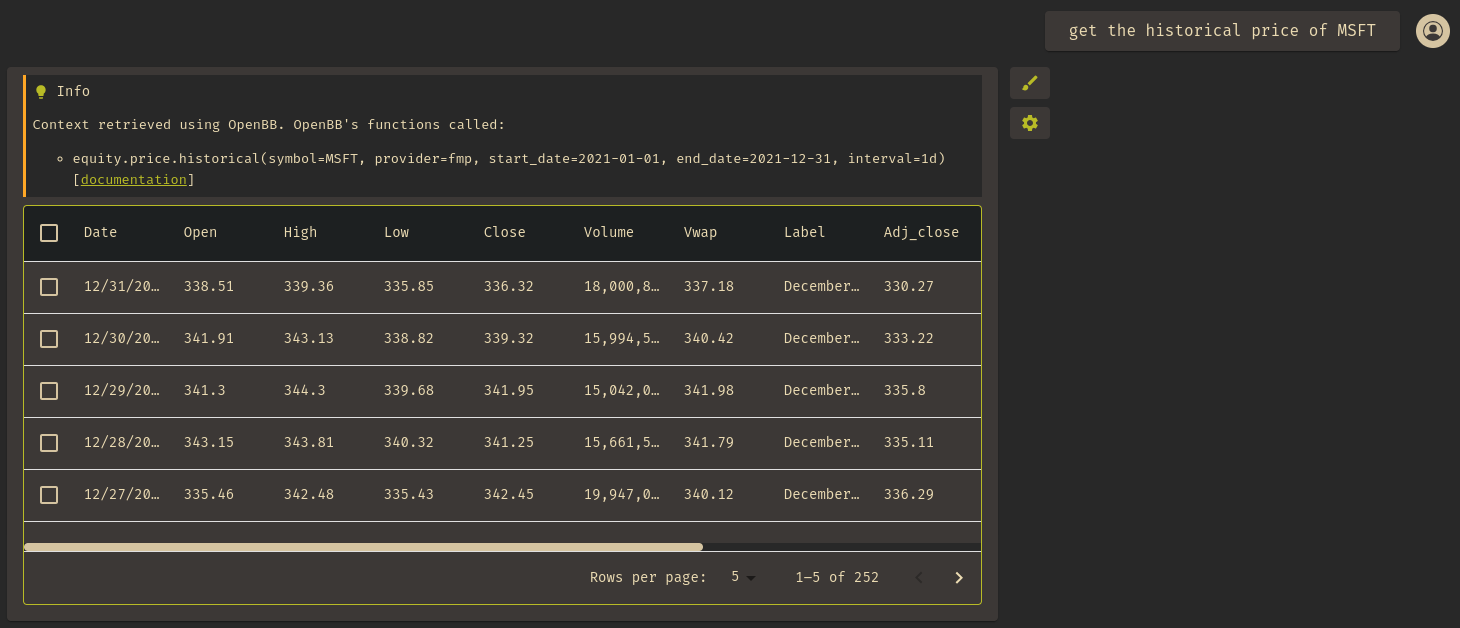

After configuring OpenBB's PAT, let's request some specific data. For example, you can ask for the historical price of MSFT with the query get the historical price of MSFT:

Awesome! The data comes back in the form of a handy table that we can effortlessly navigate, download as JSON or Excel, or unleash the magic of visualization with just a click on the brush 🖌️. And there you have it, the plot automatically generated by GPTStonks Chat CE using TradingView's Lightweight Charts 📊:

It's a seamless experience, making financial data analysis a breeze!

Benefits of using tables, candle charts and downloaded data

Having financial data presented in tables, candle charts, and JSON or Excel format offers several distinct benefits, each catering to specific aspects of analysis:

- Tables:
  - Structured Information: Tables provide a structured and organized representation of data, making it easy to read and comprehend.
  - Comparative Analysis: Side-by-side comparison of different financial metrics becomes convenient, facilitating quick insights.
  - Numerical Precision: Tables retain numerical precision, ensuring accurate representation without losing significant data.

- Candle Charts:
  - Visual Patterns: Candle charts offer a visual representation of price movements, making it easier to identify patterns and trends in the market.
  - Historical Context: The historical context provided by candle charts aids in understanding market sentiment and potential future movements.
  - Decision Support: Traders often use candle charts to make decisions based on chart patterns and technical analysis.

- Downloaded data as Excel (or JSON):
  - Custom Analysis: Excel provides a versatile platform for custom analysis, allowing users to apply formulas, create pivot tables, and conduct scenario analysis.
  - Data Manipulation: Users can manipulate financial data in Excel, enabling tasks like sorting, filtering, and aggregating data for specific insights.
  - Graphical Representations: Excel's charting capabilities allow for the creation of various charts and graphs, enhancing visual representation for better interpretation.
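As an example of the custom analysis the downloaded JSON makes possible, the Python sketch below computes day-over-day percentage returns and labels each candle as bullish or bearish. The record layout is an assumption: a list of objects with date, open and close fields, with ISO-formatted dates so that sorting the strings sorts them chronologically. The export from GPTStonks Chat CE may use different field names, in which case adjust the keys.

```python
import json


def load_records(path):
    """Load a downloaded JSON file, assumed to hold a list of OHLC records."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def daily_returns(records):
    """Percentage change of `close` between consecutive records, ordered by date."""
    rows = sorted(records, key=lambda r: r["date"])  # ISO dates sort chronologically
    return [
        (cur["date"], (cur["close"] / prev["close"] - 1.0) * 100.0)
        for prev, cur in zip(rows, rows[1:])
    ]


def candle_colors(records):
    """Label each record as a bullish (close >= open) or bearish (close < open) candle."""
    return {
        r["date"]: "bullish" if r["close"] >= r["open"] else "bearish"
        for r in records
    }
```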

Seeking simplicity? Give GPTStonks Chat web a try!

We're thrilled to share that our web service is on the horizon. Forget about hardware or configurations and focus on refining your investments with top-notch information. If you're eager to get started, be sure to join the beta waitlist. Stay tuned for more updates on this exciting development!

🚨 Important Remarks

Since this setup runs open-source models locally on your CPU and RAM, here are a few considerations:

- ⚡ Speed depends on model size and hardware. Using GPUs or smaller models can speed things up, while external providers such as OpenAI can improve both speed and accuracy.

- 💡 Zephyr 7B Beta - GGUF requires approximately 7GB of RAM.

- 🤖 Effective prompting is essential for optimal performance. Check out our .env.template for prompts inspired by OpenAI's guide.

Time to explore, analyze, and thrive! 🚀✨