How Large Language Model (LLM) selection impacts inference time on consumer hardware

Hey finance and AI enthusiasts!

TL;DR

This blog post explores how the choice of Large Language Model (LLM) inference framework affects inference time on consumer hardware. It focuses on four prominent options: Llama.cpp's GGUF, GPTQ (with Hugging Face's LLMs), the OpenAI API, and AWS Bedrock. It covers the quantization strategies behind the two local options, discusses the pros and cons of each framework, and presents benchmark results. Llama.cpp's GGUF stands out for its lightweight quantization techniques, which make it well suited to resource-constrained environments running on CPU, although fewer pre-trained models are available in this format. GPTQ with Hugging Face's LLMs benefits from a vast repository of pre-trained models and a robust community, but it may require a GPU, and some models have higher resource requirements. The post also outlines what the OpenAI API and AWS Bedrock offer as hosted alternatives, from access to advanced language models to a wide range of applications. Overall, the aim is to help finance and AI enthusiasts make informed decisions when selecting an LLM inference framework for their applications.

Introduction

Large Language Models (LLMs) have emerged as powerful tools in natural language processing, with a significant impact on applications in finance and AI. Selecting the right inference framework is pivotal to striking a balance between accuracy and speed. In this guide, we look at four prominent LLM inference options: Llama.cpp's GGUF, GPTQ, the OpenAI API, and AWS Bedrock. We cover the fundamental concepts behind each one, list their pros and cons, and provide benchmark results to help you make informed decisions.

Local LLMs with Quantization

LLM quantization reduces the numerical precision of model weights (for example, from 16-bit floats to 4-bit integers). This shrinks memory usage and speeds up inference with minimal loss of accuracy. Let's look at the quantization strategies behind the two local options.
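
To make the idea concrete, here's a minimal sketch of the simplest scheme, symmetric round-to-nearest quantization with a single scale per weight vector. This is not exactly what GGUF or GPTQ do: GGUF formats use per-block scales, and GPTQ adds error compensation. It only shows the basic precision-for-memory trade. The function names and the toy weights are our own.

```python
def quantize_symmetric(weights, bits=4):
    """Round-to-nearest symmetric quantization with one absmax-based scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    qmin = -qmax - 1            # -8 for 4-bit
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax > 0 else 1.0
    # Each weight becomes a small integer code; only the codes and the scale are stored.
    codes = [max(qmin, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights at inference time."""
    return [c * scale for c in codes]


weights = [0.7, -0.35, 0.0, 0.1, -0.62]
codes, scale = quantize_symmetric(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight now needs 4 bits instead of 16, plus one shared float for the scale. In exchange, every restored weight can be off by up to half a quantization step (`scale / 2`).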

Llama.cpp's GGUF

Official specification of GGUF

The official specification of GGUF can be found at https://github.com/ggerganov/ggml/blob/master/docs/gguf.md.

GGUF is a file format designed for storing models for inference with GGML (the tensor library that llama.cpp is built on) and with executors based on GGML. It is a binary format designed for fast loading and saving of models and for ease of reading. GGUF succeeds the earlier GGML, GGMF, and GGJT formats. It introduces changes that make the format extensible, so new features can be added without breaking compatibility with existing models.

The key motivations behind GGUF are:

- Single-file deployment.
- Extensibility.
- mmap compatibility, for fast loading and saving.
- Ease of use: models can be loaded and saved with a small amount of code.
- Full information: everything needed to load a model is contained in the file itself.

Hyperparameters, which GGUF calls metadata, are stored as key-value pairs, so new metadata can be added without breaking compatibility.

The file structure of GGUF is precisely defined. It specifies a global alignment, little-endian byte order by default (with provision for big-endian files), and an enum of data types. Metadata values can be integers, floats, booleans, strings, or arrays, including arrays of arrays. The header contains the magic number, the format version, the tensor count, the number of metadata key-value pairs, and the pairs themselves.
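
Here's a minimal Python sketch of that header layout. It parses the magic number, version, tensor count, metadata count, and the metadata key-value pairs, following the spec's layout for versions 2 and later (version 1 used 32-bit counts). To keep it short, it has two limitations:

- It only decodes uint32 and string values.
- It builds a tiny synthetic header in memory instead of reading a real model file.

The helper names are our own, not part of any GGUF library.

```python
import io
import struct

# Metadata value type IDs from the GGUF spec (only the two this sketch decodes).
GGUF_TYPE_UINT32 = 4
GGUF_TYPE_STRING = 8


def read_gguf_string(f):
    """A GGUF string is a little-endian uint64 length followed by UTF-8 bytes."""
    (length,) = struct.unpack("<Q", f.read(8))
    return f.read(length).decode("utf-8")


def read_gguf_header(f):
    """Parse the fixed header and the metadata key-value pairs (v2+ layout)."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    # version: uint32, tensor_count: uint64, metadata_kv_count: uint64
    version, tensor_count, kv_count = struct.unpack("<IQQ", f.read(20))
    metadata = {}
    for _ in range(kv_count):
        key = read_gguf_string(f)
        (value_type,) = struct.unpack("<I", f.read(4))
        if value_type == GGUF_TYPE_UINT32:
            (value,) = struct.unpack("<I", f.read(4))
        elif value_type == GGUF_TYPE_STRING:
            value = read_gguf_string(f)
        else:
            raise NotImplementedError(f"value type {value_type} not handled in this sketch")
        metadata[key] = value
    return {"version": version, "tensor_count": tensor_count, "metadata": metadata}


def build_demo_header():
    """Write a tiny in-memory GGUF header so the parser runs without a model file."""
    buf = io.BytesIO()

    def write_string(s):
        data = s.encode("utf-8")
        buf.write(struct.pack("<Q", len(data)))
        buf.write(data)

    buf.write(b"GGUF")
    buf.write(struct.pack("<IQQ", 3, 0, 2))  # version 3, no tensors, 2 metadata pairs
    write_string("general.architecture")
    buf.write(struct.pack("<I", GGUF_TYPE_STRING))
    write_string("llama")
    write_string("general.quantization_version")
    buf.write(struct.pack("<I", GGUF_TYPE_UINT32))
    buf.write(struct.pack("<I", 2))
    buf.seek(0)
    return buf


header = read_gguf_header(build_demo_header())
```

Real model files also store floats, booleans, and arrays (for example, tokenizer vocabularies). You would need to extend the type dispatch before pointing `read_gguf_header` at an actual `.gguf` file opened in binary mode.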

The standardized key-value pairs in GGUF include essential metadata such as the architecture type (general.architecture), the quantization version (general.quantization_version), and the alignment (general.alignment). There is also architecture-specific metadata for LLaMA, MPT, GPT-NeoX, GPT-J, GPT-2, Bloom, Falcon, RWKV, and Whisper, plus metadata for LoRA and tokenizers. The specification also discusses two further points:

- A possible future extension of GGUF to include a computation graph, so that executors could run models without their own implementation of the architecture.
- A standardized naming convention for tensor names.

Pros

- Utilizes lightweight quantization techniques for efficient inference.

- Ideal for resource-constrained environments.

Cons

- Limited availability of pre-trained models compared to other frameworks.

How to use it in GPTStonks Chat CE

Download the model using huggingface-cli and add the following to your env variables (by default in .env.template):

GPTQ (with Hugging Face's LLMs)

Original paper

The original paper can be found at https://arxiv.org/abs/2210.17323.

The paper introduces GPTQ, a novel one-shot weight quantization method for Generative Pre-trained Transformer (GPT) models, addressing the challenge of high computational and storage costs associated with these large models. GPT models, renowned for their breakthrough performance in language modeling tasks, have massive sizes, and even inference for large models requires multiple high-performance GPUs.

Existing work on model compression has limitations in the context of GPT models due to their scale and complexity. GPTQ aims to overcome these limitations by proposing an efficient quantization method based on approximate second-order information. The method can quantize GPT models with 175 billion parameters in approximately four GPU hours, reducing the bitwidth down to 3 or 4 bits per weight. Remarkably, GPTQ achieves this with negligible accuracy degradation compared to the uncompressed baseline.
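
To illustrate what "approximate second-order information" buys, here's a simplified, single-row sketch of the column-by-column error compensation that GPTQ builds on. It is a pure-Python toy, not the paper's implementation. It keeps the core steps:

- Build the Hessian H = XᵀX from calibration inputs, damped by 1% of its mean diagonal.
- Quantize one column at a time.
- Push each rounding error onto the not-yet-quantized columns via the inverse Hessian.

It omits GPTQ's lazy batch updates and Cholesky-based reformulation. The weights, inputs, and grid scale are made up for illustration.

```python
def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting (fine for tiny matrices)."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def rtn(value, scale, qmin=-8, qmax=7):
    """Round one weight to the nearest point on a 4-bit grid."""
    return max(qmin, min(qmax, round(value / scale))) * scale


def gptq_row(w, inputs, scale, damp=0.01):
    """Quantize one weight row column by column with error compensation."""
    d = len(w)
    H = [[sum(x[i] * x[j] for x in inputs) for j in range(d)] for i in range(d)]
    mean_diag = sum(H[i][i] for i in range(d)) / d
    for i in range(d):
        H[i][i] += damp * mean_diag  # dampening keeps H invertible
    Hinv = invert(H)
    w = list(w)
    q = [0.0] * d
    for j in range(d):
        q[j] = rtn(w[j], scale)
        err = (w[j] - q[j]) / Hinv[j][j]
        for k in range(j + 1, d):
            w[k] -= err * Hinv[j][k]  # compensate on the remaining columns
        # Remove column j from the inverse Hessian (one Gaussian elimination step).
        for a in range(j + 1, d):
            for b in range(j + 1, d):
                Hinv[a][b] -= Hinv[a][j] * Hinv[j][b] / Hinv[j][j]
    return q


def output_error(w, q, inputs):
    """Squared error of the layer output w.x versus q.x over the calibration set."""
    return sum((sum(wi * xi for wi, xi in zip(w, x))
                - sum(qi * xi for qi, xi in zip(q, x))) ** 2 for x in inputs)


w = [0.3, 0.3]
inputs = [[1.0, 1.0]]  # two perfectly correlated input features
q_rtn = [rtn(v, 1.0) for v in w]
q_gptq = gptq_row(w, inputs, scale=1.0)
```

On this toy input, plain rounding maps both weights to 0, so the layer outputs 0 instead of 0.6. The compensated version pushes the first rounding error onto the second weight and ends at [0, 1]. That reduces the squared output error from 0.36 to 0.16.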

The main contributions and findings of the paper include:

- Efficient Quantization: GPTQ is introduced as a post-training quantization method that can efficiently quantize GPT models with hundreds of billions of parameters in a few hours.

- High Precision at Low Bitwidths: GPTQ achieves high precision even at low bitwidths, quantizing models to 3 or 4 bits per parameter without significant loss of accuracy.

- Improved Compression Gains: GPTQ more than doubles the compression gains compared to previously proposed one-shot quantization methods. This allows the execution of a 175 billion-parameter model inside a single GPU for generative inference.

- Extreme Quantization Regime: The paper demonstrates that GPTQ provides reasonable accuracy even in the extreme quantization regime, where models are quantized to 2-bit or ternary values.

- End-to-End Inference Speedups: GPTQ's improvements can be leveraged for end-to-end inference speedups over FP16, achieving around 3.25x speedup with high-end GPUs (NVIDIA A100) and 4.5x speedup with more cost-effective ones (NVIDIA A6000).

- Implementation and Availability: The authors provide an implementation of GPTQ, making it available to the community through the GitHub repository https://github.com/IST-DASLab/gptq.

- Novelty in High Compression Rates: The paper claims to be the first to show that extremely accurate language models with hundreds of billions of parameters can be quantized to 3-4 bits per component. Previous methods were accurate only at 8 bits, and prior training-based techniques were applied to smaller models.

The authors acknowledge some limitations, such as the lack of speedups for actual multiplications due to the absence of hardware support for mixed-precision operands and the exclusion of activation quantization in the current results. Despite these limitations, the paper encourages further research in this area and aims to contribute to making large language models more accessible.

Pros

- Hugging Face offers a vast repository of pre-trained models, catering to diverse requirements.

- Benefits from a robust community with frequent updates.

Cons

- Some models may have higher resource requirements.

How to use it in GPTStonks Chat CE

Add the following to your env variables (by default in .env.template):

External LLMs via APIs

OpenAI

The OpenAI API is a service that lets developers integrate OpenAI's language models into their own applications, products, or services. It gives access to state-of-the-art models, such as those in the GPT-3.5 and GPT-4 families. Developers can thus use advanced natural language processing capabilities without having to train or maintain these models themselves.

Here are key points about the OpenAI API:

- Access to Advanced Language Models: The API provides access to OpenAI's sophisticated language models, allowing developers to perform various natural language understanding and generation tasks.

- Wide Range of Applications: Developers can use the OpenAI API for tasks such as text generation, translation, summarization, question-answering, and more. The versatility of the language models makes the API suitable for a broad spectrum of applications.

- Integration with Custom Applications: Developers can integrate the OpenAI API into their own applications, websites, or services. This enables them to enhance the natural language capabilities of their products without the need to develop and train large-scale language models from scratch.

- API Key Authentication: Access to the OpenAI API typically requires developers to obtain API keys. These keys serve as authentication credentials, allowing OpenAI to track usage and manage access to the API.

- Input and Output Customization: Developers can provide input prompts to the language models through the API, influencing the generated outputs. This allows customization of the model's behavior to suit specific use cases or preferences.

- Ongoing Improvements: OpenAI may release updates to their language models, and developers using the API can benefit from these improvements without having to retrain their own models.
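
As a minimal sketch of the key-authentication and prompt points above, the snippet below builds a Chat Completions request with only the Python standard library. The request targets the model used in our benchmark, and `send_chat_request` sends it and returns the first reply. The system prompt and the helper names are hypothetical, chosen for illustration. In practice you might prefer OpenAI's official Python client, which wraps the same endpoint.

```python
import json
import urllib.request

CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, api_key, model="gpt-3.5-turbo-1106", temperature=0.0):
    """Build (but do not send) an authenticated Chat Completions request."""
    body = {
        "model": model,
        "messages": [
            # Hypothetical system prompt; customize it for your use case.
            {"role": "system", "content": "You are a concise financial assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        CHAT_COMPLETIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key authenticates the call
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

A call would look like `send_chat_request(build_chat_request("What does EBITDA measure?", os.environ["OPENAI_API_KEY"]))`. Keeping the key in an environment variable avoids hard-coding credentials.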

Pros

- Employs state-of-the-art pre-trained models, ensuring high accuracy.

- Seamlessly integrates with OpenAI's ecosystem.

- Fast, accurate and generally reliable LLMs.

Cons

- Usage costs can add up, especially for large-scale applications.

- Requires an OpenAI account and an API key.

- Closed source, so little is known about the models' architectures.

How to use it in GPTStonks Chat CE

Add the following to your env variables (by default in .env.template):

AWS Bedrock

Amazon Bedrock is a fully managed service offered by AWS that provides a range of capabilities for building generative AI applications. It includes high-performing foundation models (FMs) from leading AI companies, such as AI21 Labs, Anthropic, Cohere, Stability AI, and Amazon. Amazon Bedrock allows developers to experiment with various FMs, customize them with techniques like fine-tuning and retrieval-augmented generation (RAG), and create managed agents for complex business tasks, all without writing code.

Key features and capabilities of Amazon Bedrock include:

- Model Availability and Integration: Amazon Bedrock offers a choice of foundation models that users can access through the AWS Management Console, AWS SDKs, and open-source frameworks. Models from providers like Meta's Llama 2 with 13B and 70B parameters are also becoming available.

- No-Code Development: Developers can build generative AI applications without writing code. The service supports experimentation with different FMs, customization with private data, and the creation of managed agents for specific tasks.

- Serverless and Integrated with AWS: Amazon Bedrock is serverless, eliminating the need for users to manage infrastructure. It seamlessly integrates with AWS services like CloudWatch and CloudTrail for monitoring, governance, and auditing purposes.

- Privacy and Security: Data privacy is maintained, and users have control over their data. Amazon Bedrock encrypts data in transit and at rest, and users can configure their AWS accounts and Virtual Private Clouds (VPCs) for secure connectivity.

- Governance and Monitoring: The service integrates with AWS Identity and Access Management (IAM) for managing permissions. It logs all activity to CloudTrail, and CloudWatch provides metrics for tracking usage, cost, and performance.

- Flexible Interaction: Users can interact with Amazon Bedrock through the AWS Management Console, SDKs, and open-source frameworks. The service offers chat, text, and image model playgrounds for experimentation with different models.

- API Access: Developers can use the Amazon Bedrock API to interact with foundation models. The API allows users to list available models, run inference requests, and work with streaming responses for interactive applications.

- Billing and Pricing Models: Amazon Bedrock offers billing based on processed input and output tokens, with different pricing models such as on-demand and provisioned throughput.
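
As a minimal sketch of the API access point above, the snippet below builds a request for the Claude Instant v1 model used in our benchmark. It sends the request with the AWS SDK for Python (boto3) through the `bedrock-runtime` client's `invoke_model` call. It rests on several assumptions:

- boto3 is installed and AWS credentials are configured.
- Access to the model has been enabled in your account.
- The model is offered in `us-east-1` (adjust the region as needed).

The body uses Anthropic's text-completion format (a `Human:`/`Assistant:` prompt plus `max_tokens_to_sample`), which Claude Instant v1 expected at the time of writing. Check the Bedrock model documentation before relying on it. The helper names are our own.

```python
import json

MODEL_ID = "anthropic.claude-instant-v1"


def build_claude_body(question, max_tokens=300, temperature=0.0):
    """JSON body in Anthropic's text-completion format for Claude Instant v1."""
    return json.dumps({
        "prompt": f"\n\nHuman: {question}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
        "temperature": temperature,
    })


def invoke_claude(question, region="us-east-1"):
    """Send one inference request through Bedrock and return the completion text."""
    import boto3  # third-party AWS SDK, imported lazily so the body builder works without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_body(question),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["completion"]
```

Splitting the body builder from the network call keeps the request format easy to inspect and test without AWS credentials.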

Pros

- Provides scalable infrastructure for handling large workloads.

- Integration with other AWS services for enhanced functionality.

- Fast, accurate and generally reliable LLMs.

Cons

- Usage costs can add up, especially for large-scale applications.

- Involves a steeper learning curve for users unfamiliar with AWS services.

- Requires an AWS account and extra configuration.

- Some of the best models (like Anthropic's models) are closed source.

How to use it in GPTStonks Chat CE

Add the following to your env variables (by default in .env.template):

Benchmark

Model selection

In this benchmark, we aim to assess the strengths and weaknesses of various inference frameworks on GPTStonks Chat CE. The models were selected with careful consideration of the following factors:

- GPTQ: Since consumer hardware often has less than 6GB of GPU VRAM, we opted for an NVIDIA GeForce GTX 1650 Ti with 4GB of VRAM. Consequently, the largest GPTQ model in use has around 3 billion parameters. After experimenting with different models, we found that daedalus314/Marx-3B-V2-GPTQ, which was quantized at the beginning of GPTStonks, performed well. However, 3-billion-parameter models may not be powerful enough for agent mode.

- Llama.cpp: Since the RAM in most consumer laptops ranges from 8 to 16GB, we selected a model with approximately 7 billion parameters. Among the options, Zephyr 7B Beta has proven to be one of the most effective. You can find its GGUF version here.

- OpenAI API: At the time of writing, GPT-3.5-Turbo-1106 is the latest GPT-3.5 version. It offers a good balance between quality and price, making it suitable for a wide range of use cases.

- AWS Bedrock: Anthropic, whose models are available through AWS Bedrock, is considered one of the most advanced providers of foundation models and a significant competitor to OpenAI. Its Claude Instant v1 model is a commendable choice, striking a balance between speed, price, and accuracy.
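
The hardware reasoning above comes down to a back-of-the-envelope estimate: weight memory is roughly the parameter count times the bits per weight, divided by 8. The sketch below computes this in decimal gigabytes. It deliberately ignores several costs, so real usage is higher:

- the KV cache
- activations
- runtime overhead
- the extra per-block scales that formats such as Q4_K_M store, which put them somewhat above 4 bits per weight on average

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate storage for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


for name, n_params in [("3B model", 3e9), ("7B model", 7e9)]:
    for bits in (16, 4):
        print(f"{name} @ {bits}-bit: {weight_memory_gb(n_params, bits):.1f} GB")
```

For example, a 3B model at 4 bits needs about 1.5 GB for its weights, which leaves headroom on a 4GB GPU, whereas the same model in 16-bit (about 6 GB) would not fit. A 7B model drops from about 14 GB in 16-bit to about 3.5 GB at 4 bits, which is what makes it practical on a laptop with 8 to 16GB of RAM.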

Results

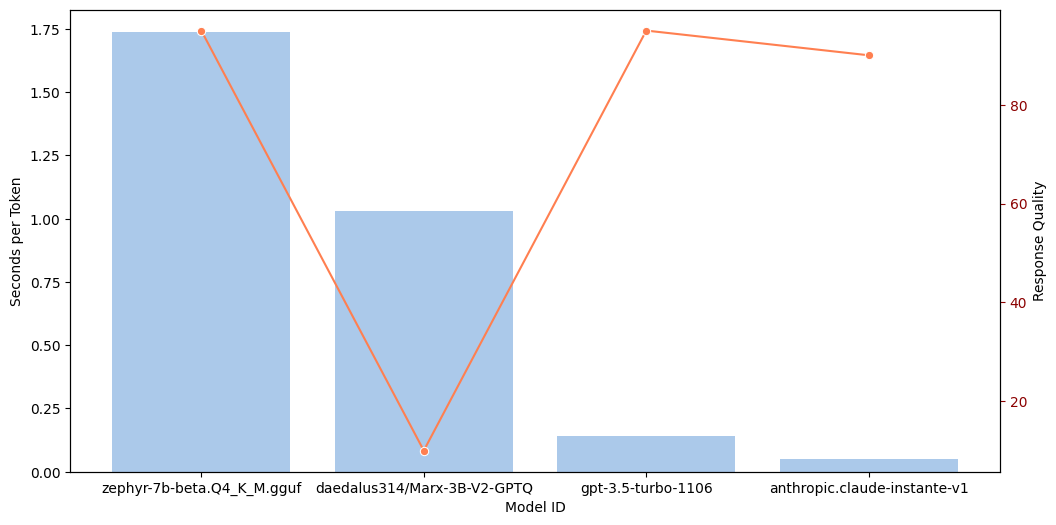

To give a practical picture of the frameworks' performance, we ran a benchmark measuring both inference time and response quality, with response quality scored by GPT-4. The detailed results below show their comparative strengths:

| Framework and model ID | Inference time (s/token) | Response quality (%) |
|---|---|---|
| Llama.cpp: zephyr-7b-beta.Q4_K_M.gguf | 1.74 | 95 |
| GPTQ: daedalus314/Marx-3B-V2-GPTQ | 1.03 | 10 |
| OpenAI API: gpt-3.5-turbo-1106 | 0.14 | 95 |
| AWS Bedrock: anthropic.claude-instant-v1 | 0.05 | 90 |

The chart at the top of this section visualizes the inference time and response quality for each model and framework.

The graph shows that the closed models are significantly faster than the local models when resources are limited, even with quantization and a consumer GPU. These observations may not hold for open-source models running on high-end servers. In terms of response quality, open and closed models can achieve similar results: Zephyr 7B Beta scores on par with GPT-3.5-Turbo. However, this comes at the cost of speed or expensive hardware (Zephyr 7B Beta would consume around 7GB of GPU VRAM). From a user's point of view, external models can deliver quicker responses of comparable or better quality. They do come with considerations such as API service usage and a balance between privacy and autonomy.
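
If you want to take seconds-per-token measurements on your own setup, a minimal harness only needs a clock and a token count. The sketch below assumes a hypothetical `generate(prompt)` callable that returns the list of generated tokens; you would wrap llama.cpp, a GPTQ model, or an API client to fit. It is not necessarily how the numbers above were produced. The clock is injectable so the functions can be tested deterministically.

```python
import time


def seconds_per_token(generate, prompt, clock=time.perf_counter):
    """Wall-clock seconds per generated token for one call of `generate`.

    `generate` is a hypothetical interface: any callable that takes a prompt
    and returns the list of generated tokens.
    """
    start = clock()
    tokens = generate(prompt)
    elapsed = clock() - start
    if not tokens:
        raise ValueError("generate() returned no tokens")
    return elapsed / len(tokens)


def mean_seconds_per_token(generate, prompts, clock=time.perf_counter):
    """Average the per-token latency over several prompts."""
    samples = [seconds_per_token(generate, p, clock) for p in prompts]
    return sum(samples) / len(samples)
```

Assuming a constant per-token rate, the table's numbers put a 200-token answer at:

| Model | Time for 200 tokens |
|---|---|
| Zephyr 7B Beta (Llama.cpp, CPU) | about 348 s |
| Marx-3B-V2-GPTQ | about 206 s |
| gpt-3.5-turbo-1106 | about 28 s |
| Claude Instant v1 | about 10 s |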

Conclusion

In summary, navigating the world of LLM inference frameworks requires careful consideration of quantization techniques, inference procedures, and sampling methods. The benchmark results indicate that closed models served through APIs respond much faster than local models when resources are limited, even when the local models use quantization and consumer GPUs. However, these findings may not apply to open-source models running on high-end servers.

From a user perspective, tapping into external models can offer quicker responses of comparable or better quality. Yet it comes with considerations such as API service usage and a trade-off in privacy and autonomy. By grasping these key concepts and weighing the pros and cons of different frameworks, users can confidently choose the LLM solution that best fits their needs.

Stay curious and happy coding!